AI-Detective

This project explores how user research methods support iterative divergence and synthesis to uncover valuable design insights. The final output is a mini-game prototype that helps college students better understand AI bias and use generative AI more mindfully.

Timeline

Jan 2025 - May 2025

Project

Course project

Responsibilities

UX Research

Prototyping

Interaction Design

User Interface Design

Tools

UX Research methods

Figma

Challenge

AI bias was a relatively unfamiliar topic for us as researchers at the start. Additionally, our user research showed that most college students lack strong motivation to learn about it. The challenge was to balance raising awareness with keeping users engaged, without causing frustration or boredom.

Opportunity

Although we were new to AI bias, prior user research from our instructor provided valuable insights into the topic and user attitudes. Through iterative ideation and testing, we gained further user insights that helped ensure the practicality of our final design.

OVERVIEW

The Process

1

Preliminary research

Background Research

Data Analysis

Heuristic Evaluation

Usability Testing

2

Synthesis

Walking the wall

Reframe & Define

3

Contextual Research

Preparation

Conduct & Analysis

4

Modeling

Affinity Clustering

Modeling

Research Report

Speed Dating

5

Deliverable Reflection

Assumption Artifacts

Elevator Pitch

Course Reflection

PRELIMINARY RESEARCH

Background Research

01. Overall approach

In the background research phase, I conducted two experiential and five informational studies, from which I synthesized six key insights and completed a Context & Change Worksheet. Click here to view the full research report.

02. Insights

- Feedback could partly improve results

Feedback can help optimize the generated results, but providing it is currently neither easy nor very effective.

- Lack of tools

Users need more accessible tools to help identify potential biases during their usage and provide quick feedback.

- Showing AI's thinking and sources can aid in spotting bias

Displaying the thought process and information sources behind generative AI can help users more easily identify potential bias issues.

- Frequent audits reduce ethical problems

Regular audits can help increase awareness of bias issues and offer valuable data to enhance generative AI.

- Marginalized groups

Current attention to generative AI bias affecting marginalized groups focuses primarily on the LGBTQ community, people of color, and women, with little attention paid to disabled communities.

- Stereotypes in bias

Bias discussions often focus on race and current topics, overlooking other biases such as cultural and language bias.

03. Context & Change Worksheet

PRELIMINARY RESEARCH

Data Analysis

01. Overview

We analyzed survey data collected by the WeAudit team, including our instructor Dr. Hong Shen. The survey (N = 2,197) explores how individuals’ identities, experiences, and levels of knowledge influence their ability to detect harmful algorithmic biases, particularly in the context of image search. Our analysis focused on three primary identity dimensions—race, sexuality, and gender—along with intersecting factors such as education level. Click here to view the full data analysis report.

02. Visualization

Within our team, I was responsible for researching how sexual orientation influences the perception of AI bias. I chose a bar chart for the visualization because it clearly illustrates the differences in opinion among groups.

Overall, across sexual orientations, approximately 50% of people perceive the content as harmful, while the other 50% do not. The heterosexual group perceives the least harm from the image, with only 27.73% of them considering the content harmful.

03. Takeaways

Insights

- People are more likely to perceive biases in areas that pertain to themselves.

- People tend to recognize bias when there are familiar over-representations or under-representations present within the results of a query.

Hypothesis

- Certain demographics of people identify specific algorithmic biases (e.g., racial or gender bias) in GenAI more accurately than their peers.

- Certain algorithmic biases (e.g., sexuality bias) in GenAI are more difficult for people to identify, necessitating different methods for their identification.

PRELIMINARY RESEARCH

Heuristic Evaluation

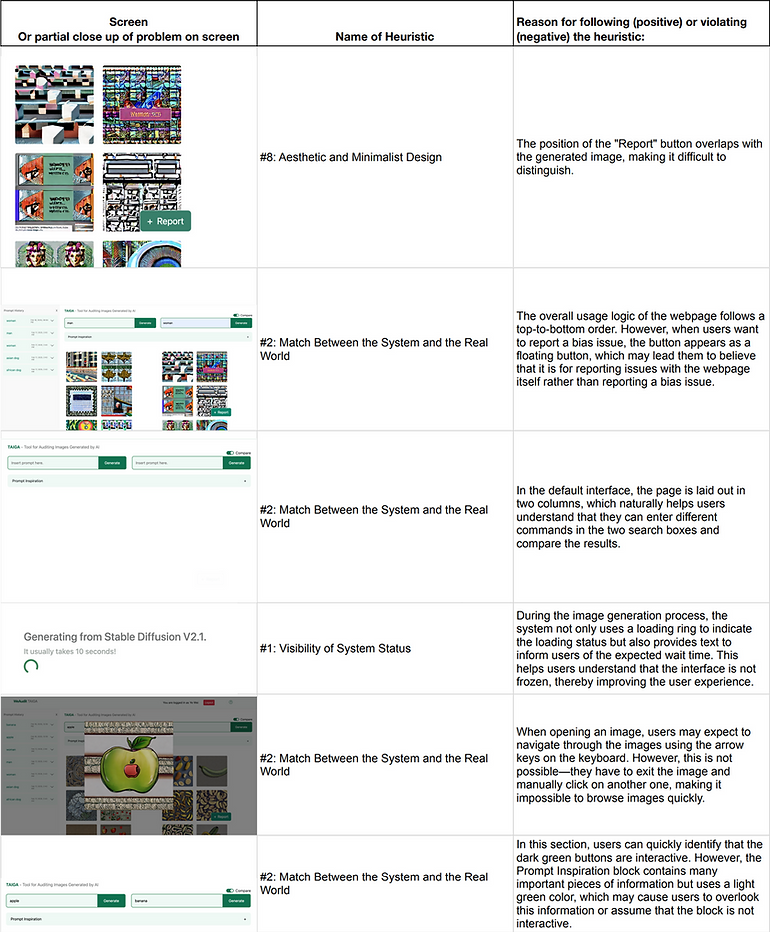

02. My Heuristic Evaluation Findings

PRELIMINARY RESEARCH

Usability Test

01. Overview

Following the heuristic evaluation, the goal of our usability testing was to evaluate WeAudit’s intuitiveness for first-time users and the speed at which they understood its core features. We employed the Think-Aloud protocol to capture user feedback. Click here to view the full usability testing plan and click here to see the whole usability test report.

02. My Usability Test Findings

SYNTHESIS

Walking The Wall

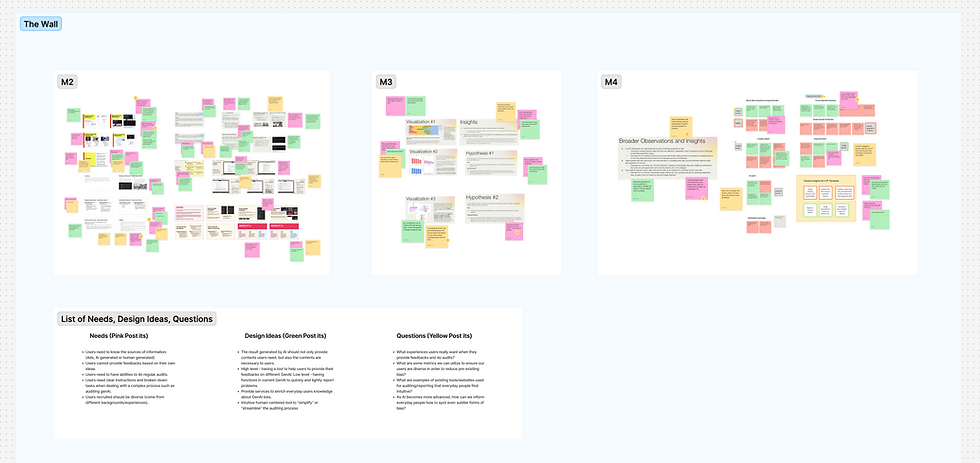

We conducted Walking the Wall to re-immerse ourselves in the data and analyses we had completed throughout the project. We walked through selected outputs from our background research, findings from our data analysis, and high-level insights from our heuristic evaluation and usability testing. Click here to see the details.


SYNTHESIS

Reframe & Define

01. Reframing Activity

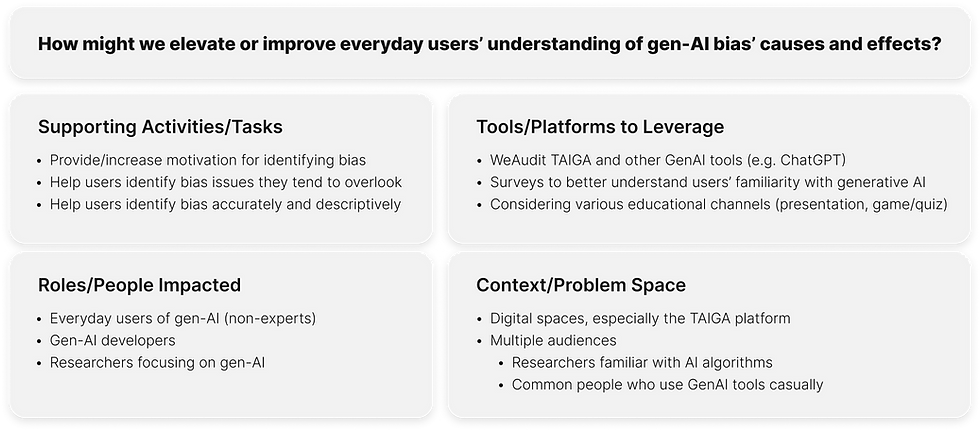

We chose the Reverse Assumptions method because we shared similar mental models of the generative AI bias problem space. To break out of our conventional thinking, we believed that intentionally challenging and flipping our assumptions would help us generate a broader and more diverse set of potential approaches for our project.

Our future research will focus on enhancing everyday users’ understanding of generative AI bias, increasing transparency within AI systems regarding potential biases, and creating more opportunities for users to provide detailed feedback on bias issues.

02. Define The Project

CONTEXTUAL RESEARCH

Preparation

01. Overview

Before conducting the experiment, we first drafted a research guide, which included the introduction, consent script, consent form, research goals, our chosen methods (selected from think-aloud, contextual inquiry, and directed storytelling), the experimental procedure, and interview questions.

To ensure the feasibility of the study plan, we conducted a pilot test and refined the research guide based on the feedback and results.

02. Draft Research Guide

We chose the think-aloud protocol to better understand users’ thought processes when interacting with a gen-AI tool. By placing users in realistic scenarios, we could closely observe how they respond to current gen-AI technologies. The main challenge was designing effective scenarios and asking timely, insightful questions. Click here to see the full draft research guide.

03. Pilot Test

The pilot test showed that the structure of the experiment was well designed and facilitated strong participant engagement. However, some questions were too general, resulting in superficial answers, and should be removed. The feedback also recommended allocating more time to interviews than to the think-aloud protocol, as interviews tend to provide deeper and more meaningful insights. Click here to see feedback from the pilot test.

04. Final Research Guide

In the final research plan, the experiment was optimized based on feedback from each participant in the pilot test, with a greater focus on users’ feedback after using the AI tool. Additionally, qualified participants were prepared for the formal experiment.

Our goals are to better understand:

- How familiar users are already with potential bias in gen-AI content

- What aspects of gen-AI bias users may be unaware of

- Users’ ability to label/identify gen-AI bias

- Strategies to improve users’ ability to identify gen-AI bias

Core questions:

- How well can everyday users identify algorithmic bias already?

- What factors affect a user’s ability to detect algorithmic bias?

- What are potential ways to improve users’ ability to identify bias?

CONTEXTUAL RESEARCH

Conduct & Analysis

01. Conducting Session

Based on the planned procedure, I carried out the formal experiment and summarized the following key findings.

1. Users prioritize output accuracy over fairness or diversity

2. Bias is acknowledged but not internalized as a problem

3. Bias perception depends on the result, not the prompt itself

4. Limited motivation to change or question the system

5. Curiosity about AI mechanisms could be a potential entry point

02. Interpretation Notes

Upon completing our individual experiments, we held a group discussion to interpret our notes and align our findings. We then synthesized our insights into a shared spreadsheet for further analysis. Click here to see the spreadsheet.

MODELING

Affinity Clustering

01. Create and Group Notes

We used FigJam to synthesize and group the notes from our Interpretation Notes. From this process, we identified different themes and represented them using color-coded labels.

02. Top-Level Themes

Through iterative clustering, we ultimately organized the notes into three main themes:

- Initial expectations and beliefs about generative AI systems and algorithmic bias

- Processing and perceiving algorithmic bias in generative AI

- Approaches to increasing awareness of bias

MODELING

Modeling

01. Personas

Modeling is another form of research synthesis that allows us to explore our data from different perspectives. We chose to create personas as a way to reframe our notes, because although we had decided to focus on college students as our target users, we observed significant differences in their levels of understanding and attitudes toward generative AI. Developing personas helped us capture these variations and better tailor our design decisions to distinct subgroups within the college student population.

02. Journey Mapping

In addition, we chose to create a journey map because we found that users’ motivation plays a crucial role in reducing AI bias. This tool allowed us to identify how motivation shifts across different stages of the user experience, revealing new design opportunities along the way.

MODELING

Research Report

After conducting affinity clustering and analyzing our data through personas and journey mapping, we gained more actionable insights and further refined our research goals and methods. Click here to see the research report.

High Level Insights:

1. A user’s ability to identify bias is inherently linked to their understanding of discrimination, bias, and prejudice.

2. There is a common belief that AI tasked with menial or non-creative tasks won’t produce biased consequences.

3. Users could benefit from a better understanding of the development and operations behind Gen-AI models when identifying algorithmic biases.

4. Distrust of generative AI arises, especially on human-centric topics, due to lack of transparency, single-perspective responses, and inherent skepticism.

5. Bias detection in generative AI is influenced by external factors and improves when bias is a focal point of discussion.

MODELING

Speed Dating

01. Overview

In the Speed Dating process, we validated user needs and values and identified conceptual risk factors through a series of steps, including Walk the Wall, Crazy 8s, concept selection, storyboard creation, and Speed Dating sessions.

02. Walk the Wall

We conducted another round of Walk the Wall to organize additional notes and experimental data, resulting in a list of insights, user needs, and questions.

03. Crazy 8s

To generate ideas that focus on the needs and values of future users based on our research, we conducted a Crazy 8s session. We rapidly sketched storyboards to communicate the user insights we uncovered and to promote discussion around people’s desires, values, and needs.

User Needs:

1. Desire to learn more about AI bias

2. Desire to learn inner workings of AI systems (including source of results)

3. Multiple outputs in gen-AI results

4. Examples to demonstrate AI bias

5. Quick, intuitive, and engaging delivery method

04. Speed Dating

Starting from the needs identified during the Crazy 8s session, we designed safe, risky, and crazy-risky concepts for each need and visualized them through storyboards. We then conducted Speed Dating sessions with users to gather feedback on these concepts.

Click here to read the full Speed Dating report.

New Design Opportunities:

- More diverse views for displaying AI results

- Options for users to modify the details of AI-generated results

- A simple and quick mini-game about AI bias

Common Misunderstandings:

- The extent to which users need more bias education

- Distinction between incorrect answers and biased answers

- Bias reported by the user vs. the platform

DELIVERABLE

Assumption Artifacts

01. Assumption Artifact Proposal

Each member of our team individually envisioned an Assumption Artifact to help address our current research question. From our own perspectives, we identified the riskiest assumption, honest signal, measures, as well as the participants and setting.

My proposal is to add a bias-check button within generative AI systems. By clicking this button, users can review their generated content and clearly identify different types of potential bias. I believe this approach strikes a balance between supporting user motivation and ensuring functional effectiveness. Click here to see my proposal.

02. Assumption Artifact & Testing Plan

Our team consolidated all of our individual proposals and selected one direction to move forward with based on a group vote. We then created a prototype to conduct further testing. Click here to read the testing plan.

We created a low-fidelity Figma prototype of a mini-game embedded in ChatGPT. Before accessing the platform, users play as a crocodile investigator assessing fairness in marsh entry decisions. The goal is to spark interest and help users better understand bias in generative AI.

03. Test Results

We tested with undergraduate students using a think-aloud protocol and collected follow-up data via a Google Form, then analyzed the data using both quantitative and qualitative methods. Click here to see the test results.

Users found the game easy but lacking in nuance, and some were confused about its context and feedback design. Moving forward, we plan to improve clarity, add feedback regardless of answer correctness, provide game context upfront, and introduce more complex bias scenarios. These insights will guide our next design iteration.

04. Elevator Pitch

College students—especially in the age of generative AI—value speed and efficiency. When learning something new, they often turn to gamified platforms like Duolingo rather than traditional textbooks. To support this behavior, we created a short, engaging educational mini-game embedded within ChatGPT to help everyday college students improve their foundational understanding of generative AI bias.

REFLECTION

Course Reflection

As a designer, I learned many valuable user research methods through the UCRE course. For example, the think-aloud protocol gave me deeper insights into users’ thoughts on our prototype, while speed dating proved to be an efficient method for both generating and evaluating design solutions.

This class really helped me see the broader value of storyboards. Previously, I only used them to present design ideas, but now I understand how they can also be used in speed dating sessions to effectively evaluate those ideas.